MAGA AI Draw Patch Notes

v1.7 Trained on a large 1B multilingual dataset, including samples that we used to train Grok AI Draw.

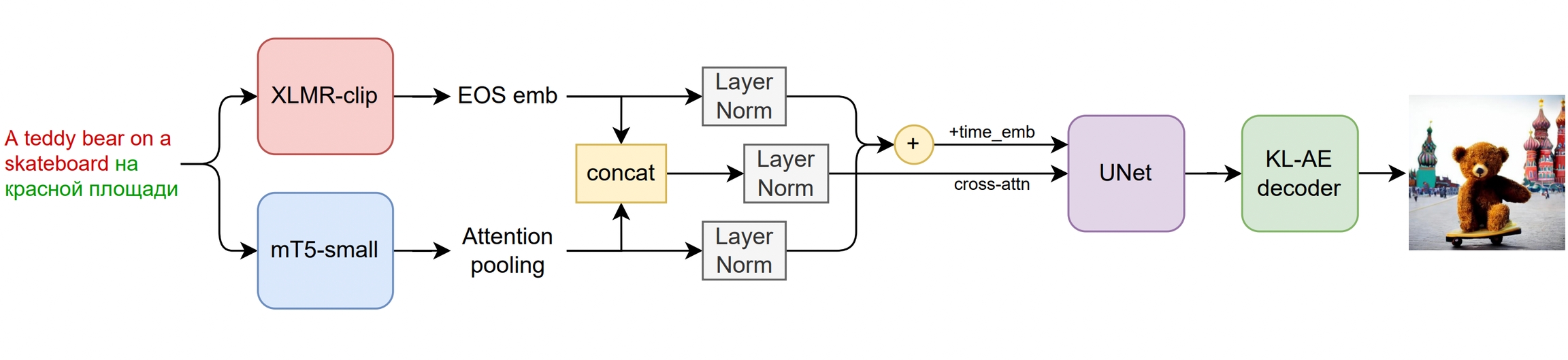

In terms of diffusion architecture, Grok AI Draw implements a UNet with 1.2B parameters.

v1.6 Launched a streamlit script that creates image variations using both the CLIP-L and OpenCLIP-H models. The script can add a noise_level to the CLIP embeddings, which increases output variance and lets users create more varied outputs.

v1.5 Added schedulers: DDIM, K_EULER, DPMSolverMultistep, K_EULER_ANCESTRAL, PNDM, and KLMS.

Removed PNDM, KLMS, and K_EULER_ANCESTRAL from the scheduler choices.
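
As a rough illustration of the scheduler changes above, the sketch below keeps a table of scheduler choices and filters out the removed ones. The mapping from choice names to diffusers class names is an assumption (the notes do not say which classes back each name), and `resolve_scheduler` is a hypothetical helper, not part of MAGA AI Draw.

```python
# Assumed mapping from scheduler choice names to diffusers class names.
# The class names exist in diffusers, but the pairing is a guess.
ALL_SCHEDULERS = {
    "DDIM": "DDIMScheduler",
    "K_EULER": "EulerDiscreteScheduler",
    "DPMSolverMultistep": "DPMSolverMultistepScheduler",
    "K_EULER_ANCESTRAL": "EulerAncestralDiscreteScheduler",
    "PNDM": "PNDMScheduler",
    "KLMS": "LMSDiscreteScheduler",
}

# Choices listed as removed in the notes.
REMOVED = {"PNDM", "KLMS", "K_EULER_ANCESTRAL"}

# Choices that remain selectable after the removal.
AVAILABLE_SCHEDULERS = {
    name: cls for name, cls in ALL_SCHEDULERS.items() if name not in REMOVED
}


def resolve_scheduler(name):
    """Return the assumed class name for a choice, or raise if it is not offered."""
    if name not in AVAILABLE_SCHEDULERS:
        offered = ", ".join(sorted(AVAILABLE_SCHEDULERS))
        raise ValueError(f"Unknown or removed scheduler {name!r}; choose one of: {offered}")
    return AVAILABLE_SCHEDULERS[name]
```

Under these assumptions, `resolve_scheduler("DDIM")` succeeds while `resolve_scheduler("PNDM")` raises a `ValueError`.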

v1.4 Added more image size options, raised the output limit to 10, and fixed issues with multiple outputs not functioning correctly.

v1.3 Uses the img2img pipelines from diffusers to produce better-quality output when the init_image input is provided.

v1.2 Initialized from the MAGA-Draw-v1-2 checkpoint, then fine-tuned for 595k steps at 512x512 resolution on "laion-aesthetics v2 5+", with 10% of the text-conditioning dropped to improve classifier-free guidance sampling.
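
Dropping 10% of the text-conditioning can be pictured with a minimal sketch like the one below. It assumes that a dropped caption is replaced by an empty prompt, which is one common way to do this. The notes do not describe the actual training code, and `drop_text_conditioning` is a hypothetical helper.

```python
import random


def drop_text_conditioning(captions, drop_prob=0.1, seed=0):
    """Replace each caption with an empty prompt with probability drop_prob.

    Training on a mix of captioned and uncaptioned samples lets one model
    produce both conditional and unconditional predictions, which is what
    classifier-free guidance needs at sampling time.
    """
    rng = random.Random(seed)
    return ["" if rng.random() < drop_prob else caption for caption in captions]


captions = [f"caption {i}" for i in range(10_000)]
dropped = drop_text_conditioning(captions)
empty_fraction = sum(c == "" for c in dropped) / len(dropped)
```

With enough samples, `empty_fraction` should land close to the 10% drop rate.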

v1.1 Initialized from the MAGA-Draw-v-1-2 checkpoint, then fine-tuned for 225k steps at 512x512 resolution on the laion-aesthetics v2 5+ dataset, with 10% of the text-conditioning dropped to improve classifier-free guidance sampling.
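
For context on why this helps at sampling time, here is a minimal sketch of the standard classifier-free guidance combination, written over plain Python lists. The function name and the `guidance_scale` parameter are illustrative assumptions, not taken from the MAGA AI Draw code.

```python
def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale):
    """Combine unconditional and text-conditioned noise predictions.

    Computes eps_uncond + guidance_scale * (eps_cond - eps_uncond) elementwise.
    A scale of 1.0 returns the conditional prediction unchanged; larger
    values push the result further toward the text condition.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Toy example with two-element "noise predictions".
guided = classifier_free_guidance([0.0, 1.0], [1.0, 3.0], guidance_scale=2.0)
```

The unconditional prediction used here is the one the model learns from the training samples whose text-conditioning was dropped.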
